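This article covers scaling a Kafka cluster out and in, and how to "backfill" partition replicas after a broker failure. Scaling out and then scaling in is, in essence, just another data migration.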

Environment

Kafka version: 2.6.

Scaling out

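Scaling out means adding nodes. Existing data is not moved automatically after scaling out. Only partitions created afterwards, such as those of newly created topics, can be placed on the new nodes. If you don't need to move existing data, simply start the new nodes; nothing else is required. Often, though, we want to move some existing data onto the new nodes to balance the load, and that has to be done manually. Kafka provides a script for this in its bin directory, kafka-reassign-partitions.sh, which changes how partitions are distributed across brokers. It is simple to use: you write a reassignment plan in JSON and pass it to the script, and the script redistributes the partitions according to that plan.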
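A reassignment plan is a JSON document that lists, for every partition to move, the broker IDs that should hold its replicas. The first ID in each list is the preferred leader. The minimal bash sketch below makes the format concrete by printing such a plan. make_plan is a hypothetical helper. Its round-robin placement is a deliberate simplification for illustration, not the algorithm that --generate uses.

```shell
#!/usr/bin/env bash
# make_plan "TOPICS" PARTITIONS RF BROKER...   (hypothetical helper)
# Prints a reassignment plan in the {"version":1,"partitions":[...]} format,
# covering partitions 0..PARTITIONS-1 of each space-separated topic and placing
# RF replicas per partition round-robin over the given broker IDs.
# Simplified placement for illustration; NOT what --generate computes.
make_plan() {
  local topics=$1 partitions=$2 rf=$3
  shift 3
  local brokers=("$@") n=$# t p r sep="" replicas
  if (( rf > n )); then
    echo "replication factor $rf exceeds broker count $n" >&2
    return 1
  fi
  printf '{"version":1,"partitions":['
  for t in $topics; do                       # word splitting on purpose
    for ((p = 0; p < partitions; p++)); do
      replicas=""
      for ((r = 0; r < rf; r++)); do
        # Start at a different broker for each partition so that preferred
        # leaders (the first replica) are spread across brokers.
        replicas+="${replicas:+,}${brokers[(p + r) % n]}"
      done
      printf '%s{"topic":"%s","partition":%d,"replicas":[%s]}' \
        "$sep" "$t" "$p" "$replicas"
      sep=","
    done
  done
  printf ']}\n'
}

# Example (not run here): plan for moving test-3 and test-4 onto 183 and 184
# make_plan "test-3 test-4" 4 2 183 184 > expand-cluster-reassignment.json
```

The output is a valid plan file, but in practice letting --generate propose the plan (and then adjusting it) is usually the easier route.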

For example, suppose the cluster has two brokers, 181 and 182, hosting four topics: test-1, test-2, test-3 and test-4. Each topic has 4 partitions and 2 replicas:

```
# Two brokers: 181 and 182
```

Now the cluster is expanded with two new nodes, 183 and 184, and we want to move test-3 and test-4 onto them.

First, prepare a JSON file like the following (say it is named topics-to-move.json):

```json
{
  "topics": [
    {"topic": "test-3"},
    {"topic": "test-4"}
  ],
  "version": 1
}
```
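With only two topics the file is easy to write by hand. For a longer topic list, a small helper can print it instead. topics_json below is a hypothetical bash function, not part of Kafka, that emits the same structure.

```shell
#!/usr/bin/env bash
# topics_json TOPIC...   (hypothetical helper)
# Prints {"topics":[{"topic":...},...],"version":1} for the given topic names,
# the format read by --topics-to-move-json-file. Kafka topic names may only
# contain ASCII letters, digits, '.', '_' and '-', so no JSON escaping is done.
topics_json() {
  local t sep=""
  printf '{"topics":['
  for t in "$@"; do
    printf '%s{"topic":"%s"}' "$sep" "$t"
    sep=","
  done
  printf '],"version":1}\n'
}

# Example (not run here): topics_json test-3 test-4 > topics-to-move.json
```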

The file lists the topics to reassign. Then run:

```shell
➜ bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 --topics-to-move-json-file topics-to-move.json --broker-list "183,184" --generate
```

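This command prints the current partition assignment along with a proposed new assignment. Both are in JSON and easy to read. Save the proposed assignment to a file (say expand-cluster-reassignment.json). You can also edit it by hand, as long as the format stays valid. Then run the following to apply the new plan: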

```shell
➜ bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 --reassignment-json-file expand-cluster-reassignment.json --execute
```

This submits the reassignment task. Check its progress with:

```shell
➜ bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 --reassignment-json-file expand-cluster-reassignment.json --verify
```
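Moving large partitions can take a while, so you may prefer to poll --verify instead of rerunning it by hand. Below is a minimal bash sketch. wait_until_done, MAX_TRIES and INTERVAL are hypothetical names. The sketch also assumes that partitions still being moved are reported with a message containing "in progress". Check the exact wording your Kafka version prints before relying on it.

```shell
#!/usr/bin/env bash
# wait_until_done CMD [ARGS...]   (hypothetical helper)
# Reruns CMD every INTERVAL seconds until its output no longer contains
# "in progress", then prints that last output. Returns 0 at that point (the
# reassignment finished OR failed, so read the printed output), or 1 after
# MAX_TRIES attempts.
# Assumption: unfinished partitions are reported with "in progress".
wait_until_done() {
  local max_tries=${MAX_TRIES:-60} interval=${INTERVAL:-10} i out
  for ((i = 1; i <= max_tries; i++)); do
    out=$("$@" 2>&1)
    if ! grep -q "in progress" <<<"$out"; then
      printf '%s\n' "$out"
      return 0
    fi
    sleep "$interval"
  done
  return 1
}

# Example (not run here):
# wait_until_done bin/kafka-reassign-partitions.sh --bootstrap-server localhost:9092 \
#   --reassignment-json-file expand-cluster-reassignment.json --verify
```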

When it finishes, check the new partition assignment of test-3 and test-4:

```shell
➜ bin/kafka-topics.sh --describe --topic test-3 --zookeeper localhost:2181/kafka_26
```

As you can see, all partitions of both topics now live on nodes 183 and 184.
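With many partitions, checking the describe output by eye gets tedious. The bash sketch below checks it mechanically. replicas_within is a hypothetical helper. It assumes each partition line contains a field such as "Replicas: 183,184", as in the 2.x describe output.

```shell
#!/usr/bin/env bash
# replicas_within ALLOWED < describe-output   (hypothetical helper)
# ALLOWED is a comma-separated list of broker IDs, e.g. "183,184".
# Succeeds only if at least one "Replicas: ..." field is found on stdin and
# every broker ID listed in those fields is in ALLOWED.
# Assumption: the describe output has fields like "Replicas: 183,184".
replicas_within() {
  local allowed=",$1," ids id
  ids=$(grep -o 'Replicas: [0-9,]*' | cut -d' ' -f2 | tr ',' ' ')
  [[ -n $ids ]] || return 1            # nothing recognisable on stdin
  for id in $ids; do
    if [[ $allowed != *",$id,"* ]]; then
      echo "found a replica on broker $id" >&2
      return 1
    fi
  done
}

# Example (not run here):
# bin/kafka-topics.sh --describe --topic test-3 --zookeeper localhost:2181/kafka_26 \
#   | replicas_within 183,184 && echo "test-3 is only on 183 and 184"
```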

Scaling in

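As shown above, partition placement is entirely under our control. Scaling in is likewise just data migration: all you need is a correct reassignment plan. Scaling in is rare in production, but one related scenario is common: a node fails and cannot be recovered. As mentioned in an earlier article, when a node fails, the partition replicas it hosted are lost. Kafka does not automatically recreate them on other available nodes. You have to create those replicas on other available nodes by hand, and this works the same way as scaling out. Continuing the example, suppose node 184 has failed permanently. Some partitions of test-3 and test-4 had replicas on it, so those replicas are gone: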

```
# Node 184 is down, and its node in ZooKeeper is gone
```

We now move the test-3 replicas that were on 184 to 181, and the test-4 replicas that were on 184 to 182. The plan looks like this:

```shell
➜ cat expand-cluster-reassignment.json
```
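Editing the plan by hand comes down to replacing the failed node's ID with a live node's ID in the replica list of every affected partition. The bash sketch below does this for one replica list. swap_broker is a hypothetical helper. It keeps the replaced ID in the same position, so if the failed node was the preferred leader, the new node takes over that role. It also refuses a replacement that already holds a replica, since a partition cannot have two replicas on the same broker.

```shell
#!/usr/bin/env bash
# swap_broker REPLICAS DEAD NEW   (hypothetical helper)
# REPLICAS is a comma-separated replica list such as "183,184". Prints the list
# with DEAD replaced by NEW in the same position, so if DEAD was the preferred
# leader (first entry), NEW becomes the preferred leader.
# Fails if NEW already holds a replica: one broker cannot hold two replicas
# of the same partition.
swap_broker() {
  local dead=$2 new=$3 id out="" IFS=,
  for id in $1; do
    if [[ $id == "$new" ]]; then
      echo "broker $new already holds a replica in $1" >&2
      return 1
    fi
    [[ $id == "$dead" ]] && id=$new
    out+="${out:+,}$id"
  done
  printf '%s\n' "$out"
}

# Example: a test-3 partition with replicas 183,184 after node 184 failed
# swap_broker 183,184 184 181   # prints 183,181
```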

Then apply the plan:

```shell
# Apply the plan
```

Doing it in the Kafka Manager UI

The UI cannot reassign topics in bulk; each topic has to be handled individually.

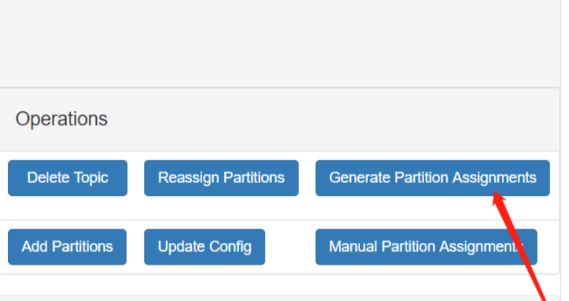

1. Open the topic view and click Generate Partition Assignments to open the partition assignment page.

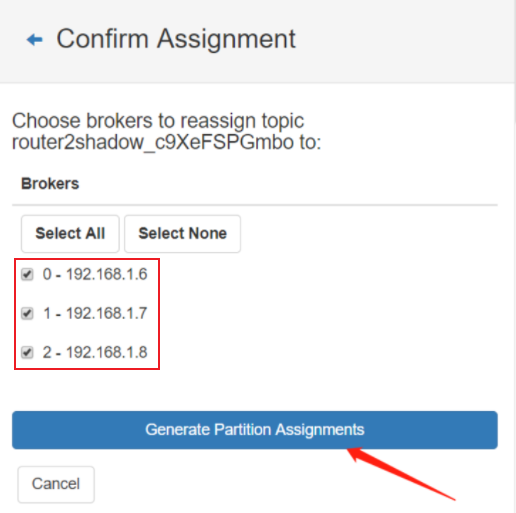

2. Select the brokers this topic should use, then click Generate Partition Assignments again.

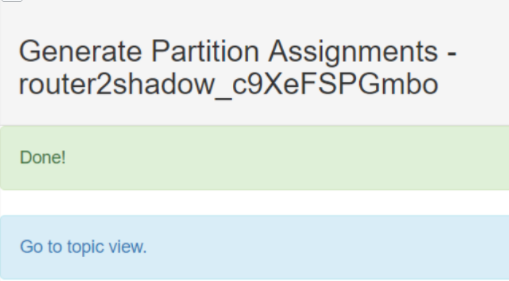

3. Once the partition assignment is generated, click go to topic view.

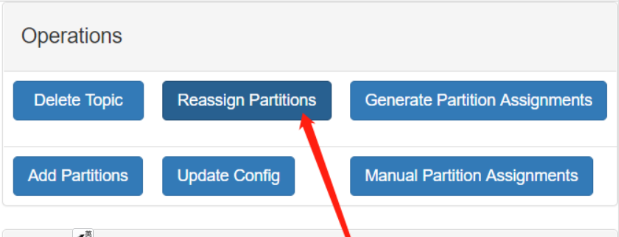

4. Click Reassign Partitions to start the reassignment.

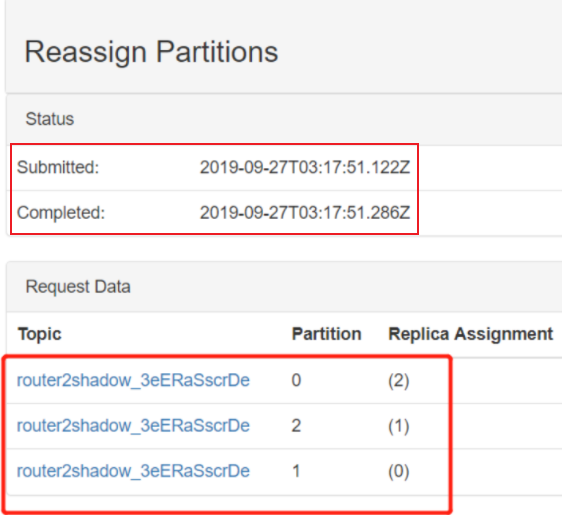

5. Click go to reassign partitions to open the reassignment page.

6. Verify the result.

Summary

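Whether you are scaling out, scaling in, or manually backfilling partitions after a failure, the underlying operation is always partition reassignment with the kafka-reassign-partitions.sh script. The script is easy to use:

1. Write a JSON list of the topics to reassign, then run --generate to produce a reassignment plan file;
2. Run --execute to apply the new plan;
3. Run --verify to check the progress of the reassignment.

If you are already familiar with the plan file format, you can skip step 1 and write the plan by hand.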
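The three steps can be wrapped in one small function, so that the script path and bootstrap address are written only once. This is a bash sketch. reassign, KAFKA_BIN, BOOTSTRAP and DRY_RUN are hypothetical names. With DRY_RUN=1 the function only prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper for the three steps. KAFKA_BIN and BOOTSTRAP default to
# the values used in this article; set DRY_RUN=1 to print commands only.
KAFKA_BIN=${KAFKA_BIN:-bin}
BOOTSTRAP=${BOOTSTRAP:-localhost:9092}

# reassign generate TOPICS_FILE BROKER_LIST
# reassign execute PLAN_FILE
# reassign verify PLAN_FILE
reassign() {
  local step=$1 file=$2
  local -a cmd=("$KAFKA_BIN/kafka-reassign-partitions.sh" --bootstrap-server "$BOOTSTRAP")
  case $step in
    generate) cmd+=(--topics-to-move-json-file "$file" --broker-list "$3" --generate) ;;
    execute)  cmd+=(--reassignment-json-file "$file" --execute) ;;
    verify)   cmd+=(--reassignment-json-file "$file" --verify) ;;
    *) echo "unknown step: $step" >&2; return 1 ;;
  esac
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "${cmd[*]}"      # show the command instead of running it
  else
    "${cmd[@]}"
  fi
}

# Example (not run here):
# reassign generate topics-to-move.json 183,184
# reassign execute expand-cluster-reassignment.json
# reassign verify expand-cluster-reassignment.json
```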